Psychologists have been better at measuring intelligence than explaining how they do so. “The indifference of the indicator” is all very well, but this dictum has been met with public indifference and incomprehension. This is because psychometricians keep saying that intelligence matters, but then put their foot in it by saying “but how you test it doesn’t matter”. Technically, this is correct: it does not matter precisely what the test is, so long as it has sufficient difficulty to stretch minds and grade them. In that sense the actual indicator of intelligence is a matter of indifference, but only so long as it has the necessary psychometric properties.

I try to get round this problem of understanding by giving the example of digit span: remembering digits forward is easy (and only weakly predictive of general ability) but remembering digits backwards is harder (and more strongly predictive of general ability). In that difference lies the essence of difficulty.

http://drjamesthompson.blogspot.co.uk/2014/03/digit-span-modest-little-bombshell.html

It then gets rather technical. Some tests are good indicators at the lower end of ability, others at the higher end. They all have characteristics and quirks. Hence the reification of intelligence test results into g, which satisfies most researchers but bemuses the general public.

Compare this with the forced expiratory volume test.

Forced expiratory volume (FEV) measures how much air a person can exhale during a forced breath. The amount of air exhaled may be measured during the first (FEV1), second (FEV2), and/or third seconds (FEV3) of the forced breath. Forced vital capacity (FVC) is the total amount of air exhaled during the FEV test.

Neat, isn’t it? (You can then study whether 30 mins of aerobic exercise over 8 weeks raises the volumes. It does, a bit.) Can psychometrics define an intelligence measure in as simple a way?

Diego Blum, Heinz Holling, Maria Silvia Galibert, Boris Forthmann. Task difficulty prediction of figural analogies. doi:10.1016/j.intell.2016.03.001

The purpose of this psychometric study is to explain performance on cognitive tasks pertaining Analogical Reasoning that were taken into consideration during the construction of a Test of Figural Analogies. For this purpose, a general Linear Logistic Test Model (LLTM) was mainly used for data analysis. A 30-itemed Test of Figural Analogies was administered to a sample of 422 students from Argentina, and eight of these items were administered along with a Matrices Test to 84 participants mostly from Germany. Women represented 77% and 76% of each respective sample. Indicators of validity and reliability show acceptable results. Item difficulties can be predicted by a set of nine Cognitive Operations to a satisfactory extent, as the Pearson correlation between the Rasch model and the LLTM item difficulty parameters r = .89, the mean prediction error is slightly different between the two models, and there is an overall effect of the number of combined rules on item difficulty (F(3,23) = 15.16, p < .001) with an effect size η² = .66 (large effect). Results suggest that almost all rotation rules are highly influential on item difficulty. (my emphasis).

Figural matrices are a good test of intelligence. Raven dreamed his up from logical principles, using patterns he had seen on pottery in the British Museum. His test works very well, even though one difficult item among the 60 is placed a little too early in the B sequence. Incidentally, to my mind this placing error is one of the proofs that the test is reasonably culture fair, in that all racial groups find it difficult, without having to confer across continents about it.

Tests of this sort are known as the A:B::C:D analogies (A is to B as C is to D). When a problem is based on finding the missing element D of the analogy (i.e., A:B::C:?), then C:D becomes the target analog and A:B becomes the source analog. What needs to be extrapolated from one domain to the other is the compound of structural relations that binds these two entities, and not just superficial data (Gentner, 1983). The basic problem A:B::C:? can be applied to different types of contents, namely: verbal, pictorial and figural (Wolf Nelson & Gillespie, 1991).

How does one describe the difficulty level of each item? Mulholland, Pellegrino, and Glaser (1980) studied the causes of item difficulty in geometric analogy problems, and concluded that the number of item elements, as well as the number of transformations, had a significant effect on error rates.

Blum and colleagues decided to build a test with designed levels of item difficulty, and chose to keep the same standard figures in all items, so as to reduce surface complexity and concentrate on underlying operational differences between items. They used 9 main rules to build the items, rotating the figures by 45, 90 and 180 degrees, using X and Y axis reflections, line subtractions and dot movements. You can call this: “How to build your own IQ test” and the supplementary material shows you how to do this. Note that certain rule combinations lead to some imprecisions and, therefore, the process of rule-based item generation should not be considered a pure-mechanical procedure. As a consequence, the authors have further explanations about their design guidelines which need to be understood.

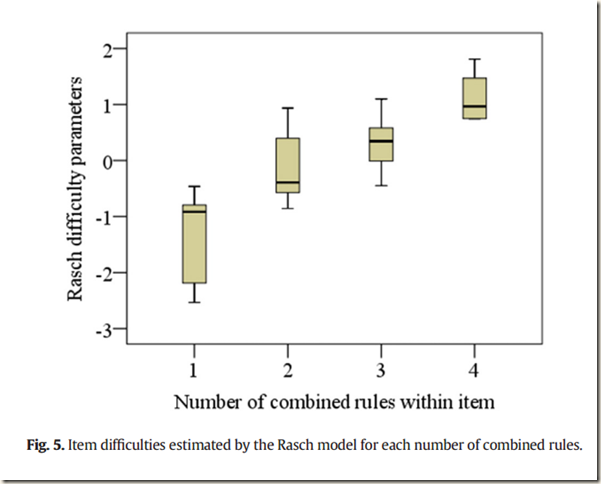

Based on the data provided in Table 2, specific rule-based contributions to item difficulty can be interpreted. The short clockwise main shape rotation, the subtraction and the dot movement rules make some contributions in this regard. Most interestingly, the best predictors of item difficulty are all the other rotation rules (i.e., both counter clockwise rotations, both long rotations, and the short clockwise trapezium rotation), followed by the reflection rule. Special mention must be given to the long clockwise trapezium rotation, which has the biggest influence on item difficulty. In other words, people found it most difficult to manipulate rotations during task resolution. In fact, the two easiest items according to the Rasch model (items 2 and 4) do not comprise rotation rules, nor does item 25 which is the 7th easiest item. Also, combining rules within a single item has an impact on item difficulty by itself, since both the ANOVA results and the Box Plot show that the higher the number of combined rules, the greater the item difficulties.
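To make the rule-based account concrete: in an LLTM, each item's difficulty is modelled as the sum of the weights of the rules it uses. The sketch below shows that idea only. The rule names are simplified, and the weights, the item compositions (the Q-matrix), the intercept and the "Rasch" difficulties are all invented for illustration; none of them are the values from the paper's Table 2.

```python
import numpy as np

# Hypothetical, simplified rule set and weights (eta). Illustrative only,
# NOT the estimates reported in the paper.
RULES = ["rotation", "reflection", "subtraction", "dot_movement"]
eta = np.array([1.2, 0.8, 0.3, 0.2])

# Hypothetical Q-matrix: one row per item, 1 if the item uses that rule.
Q = np.array([
    [0, 0, 1, 0],  # subtraction only
    [0, 0, 0, 1],  # dot movement only
    [1, 0, 0, 0],  # rotation only
    [1, 1, 0, 0],  # rotation + reflection
    [1, 1, 1, 0],  # three rules combined
    [1, 1, 1, 1],  # all four rules combined
])


def lltm_difficulty(q_matrix, weights, intercept=0.0):
    """LLTM prediction: item difficulty = sum of the weights of its rules."""
    return q_matrix @ weights + intercept


def rasch_p_correct(theta, beta):
    """Rasch model: probability that ability theta solves an item of difficulty beta."""
    return 1.0 / (1.0 + np.exp(-(theta - beta)))


# Difficulties predicted from the rules alone (intercept is an assumption).
predicted = lltm_difficulty(Q, eta, intercept=-1.0)

# Made-up item-by-item Rasch difficulties to compare against.
rasch = np.array([-0.9, -0.7, 0.4, 0.9, 1.1, 1.6])

# Pearson correlation between the two sets of difficulties,
# the same kind of statistic as the r = .89 quoted in the abstract.
r = np.corrcoef(rasch, predicted)[0, 1]
print("predicted difficulties:", predicted)
print("Pearson r:", round(r, 3))
```

With these toy numbers the items that combine more rules come out harder, which is the pattern the ANOVA in the paper describes. Real weights would have to be estimated from response data.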

I am aware that some of this has been done before, if only because I attended conferences years ago showing that an intelligence test could be constructed out of general principles of learning, and that it had good predictive value.

I think that this is a good paper which should be mentioned whenever critics assume that test material is arbitrary and unrepresentative in some way. This work establishes that rules of design complexity are strongly associated with the ease or difficulty human subjects experience when they solve problems.

One fly in the ointment: it seems that psychology is now 76% a girly subject and women are less good at mental rotation of shapes, so it might be good to check this with boys studying something other than psychology.

The authors found that this test works well at low as well as high levels of ability, which is particularly useful.

A high positive correlation (r = .89) reveals that item difficulties are strongly associated with the predicted difficulties of each rule, and these item difficulties remain practically unchanged in a further study.

By way of comparison only, the test-retest correlation of the Wechsler after 6 months is 0.93, so the above correlation of 0.89 is a very strong endorsement of the design principles of the test created by the authors.

Perhaps we have taken a step towards finding out what makes problems difficult.

Take a closer look at the paper here:

https://drive.google.com/file/d/0B3c4TxciNeJZcWZiUmpfRm50VlE/view?usp=sharing

Psychology of worker/semi-slaves* all the time,

just do not see because you don't want.

https://upload.wikimedia.org/wikipedia/commons/b/b8/Camp_ArbeitMachtFrei.JPG

"A 30-itemed Test of Figural Analogies was administered": God, that must be boring to do. How does anyone retain the interest to bother finishing it?

Seems there are three types of ADHD:

first type, the classical: combo of mental + physical hyperactivity and attention deficit,

second type, the potential sportsman: physical hyperactivity and attention deficit,

third type, the potential creative genius: (only) mental hyperactivity and attention deficit.

Mental hyperactivity is not exactly the same as neuroticism, because neuroticism tends to imply a higher density of negative thinking, while mental hyperactivity would be more heterogeneous, with a high density of positive, neutral and negative thoughts alike.

or just other bullshit...

For ADHD there should not be much biological difference between those born in August and September. Yet see the graph in

https://jasperandsardine.wordpress.com/2016/03/11/profiteering-racket-adhd-is-vastly-overdiagnosed-and-most-children-are-just-immature-say-scientists/

"ADHD Is Vastly Overdiagnosed, And Most Children Are Just Immature Say Scientists"

dux.ie

The fact that there is overdiagnosis doesn't mean that ADHD doesn't exist.

You can have an immature child who manipulates others to avoid responsibility for their attitudes AND has no attention deficit (I mean, above average), and you can have a child (or adolescent or adult) who is immature and really distracted.

Another very pertinent question is: what type of attention deficit are we talking about?

Empathetic attention deficit often results in anti-social personality OR in pseudo-anti-social personality (combo: stupidity + subconscious selfish behavior; we have two types of assholes: those very conscious of their attitudes and those too perceptively dumb to understand and weigh their own attitudes).

In my case, I have a mundane attention deficit; what I mean is, I don't have any strong motivation to internalize well the technical activities that most ordinary people do naturally.

ADHD ''doesn't exist'', technically speaking, because what we identify as psychological pre-morbid or morbid conditions are in fact an intense homogeneity of certain specific ''traits'', or just intensity or lack of it, like hyperactivity or even hypoactivity. ''average=normal''

Often people are just maladjusted to a certain environment; maladjusted is not exactly the same as ''mentally ill''.

There are some speculations.

ADHD is just the type who are at the dead end of ''tolerance for school regulations'' and other ''obligations''.

ADHD is an archaic version of humankind because they (I'm a little bit like that) do what they want, similar to non-human animals; it's not an offense.

Domesticated people internalize civic and not-so-civic regulations, while people with ADHD, on average, seem to do what they want to do, especially during school; in other words, they (and me) are LESS domesticated.

For those interested in Rasch measures, which put item difficulties and test-taker abilities on the same scale, allow all arithmetic operations (*, /, +, -, rather than at most + and - for IQ) and form the basis for item response theory (IRT) in general, this textbook is the best free online resource that I have found:

Measurement Essentials

Here is a practical slideshow walk-through showing how Rasch measures ("W score") were used in the development of the Woodcock-Johnson IQ test:

Applied Psych Test Design: Part C - Use of Rasch scaling technology. The Stanford-Binet, also published by Riverside, uses the same scale (the "change-sensitive score" scale, or "CSS"), which has as its only arbitrary choice setting the CSS for an average 10-year-old equal to 500. Since division is a valid operation on scores on this scale, one can say that in an absolute sense, the average adult with a score of 510 to 515 is only 2 or 3% more intelligent than the average 10-year-old, and less than 10% smarter than the average 5-year-old with a score of 470.

Unfortunately Riverside seems reluctant to publish the average age- vs. CSS or W-score graphs for the full test, let alone for different standard deviations, but slide 19 of the slideshow does just that for the WJ block rotation sub-test, which is likely pretty close to the full-scale results, though likely with smaller variance than the full test. (Assessment Service Bulletin Number 3: Use of the SB5 in the Assessment of High Abilities, p.11 of the PDF says the top full-scale score observed on the SB5 was 592, whereas the block-rotation subtest (BR) has an adult mean of about 508 and a s.d. of about 8.5, which would put a 592 nearly 10 s.d. out, which is rather unlikely, so the actual s.d. for the full scale must be larger. The distribution is also likely log-normal or otherwise fatter-tailed than normal.) So using BR as a proxy likely understates the differences between subjects on the full test, but even so, the difference between +3 s.d. and average adults is larger than between average adults and average 5 year olds. The BR score of a +3 s.d. 5 year old is about the same as a +0.5 s.d. 22 year-old, which would be about what I would expect the typical graduating psychology major to score. There are many other such comparisons; I have enjoyed hours playing with that chart. (I have an improved .png version rescaled in years rather than months, with a background grid, and also a Paint.NET version separated into layers for easier analysis, if anybody wants it.)
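The arithmetic in this comment can be checked in a few lines. This is only a sketch: it takes at face value the commenter's premise that ratios of CSS scores are meaningful, and it uses the approximate figures quoted above. The variable and function names are mine.

```python
# Figures quoted in the comment (all approximate).
CSS_AGE_10 = 500.0            # anchor: average 10-year-old
CSS_AGE_5 = 470.0             # average 5-year-old
ADULT_RANGE = (510.0, 515.0)  # average adult, low and high estimate


def percent_higher(score, baseline):
    """Percentage by which score exceeds baseline, treating CSS as a ratio scale."""
    return 100.0 * (score / baseline - 1.0)


# Average adult versus average 10-year-old: about 2% to 3%.
adult_vs_10 = [percent_higher(s, CSS_AGE_10) for s in ADULT_RANGE]

# Average adult versus average 5-year-old: under 10% at both ends of the range.
adult_vs_5 = [percent_higher(s, CSS_AGE_5) for s in ADULT_RANGE]

# How far out would the top observed SB5 score be if the full scale had the
# block-rotation subtest's adult mean and s.d.?
BR_ADULT_MEAN = 508.0
BR_ADULT_SD = 8.5
TOP_SB5_SCORE = 592.0
z_top = (TOP_SB5_SCORE - BR_ADULT_MEAN) / BR_ADULT_SD

print("adult vs 10-year-old (%):", [round(v, 1) for v in adult_vs_10])
print("adult vs 5-year-old (%):", [round(v, 1) for v in adult_vs_5])
print("z of top score under BR parameters:", round(z_top, 2))
```

The z value lands just under 10, which is the basis for the argument that the full-scale s.d. must be larger than the block-rotation one.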

I'd be very interested in finding similar charts for a full-scale test, fluid / crystallized scales or any other sub-tests.

-EH / savantissimo

(FYI, Wordpress is acting even more messed-up than usual.)

This is very interesting. If you have links to your calculations, or a summary of the results, I would like to see them to understand the argument further.

I'll post on my long-neglected blog and link as soon as I can.

There isn't much in the way of interesting calculations - using the graph from slide 19 linked above opened in a paint program (Paint.NET, which is free), I use the line tool to measure distances on the graph. The line tool also shows the angle of the line so you can be sure the distance measured is vertical or horizontal. By measuring the distance between the marks on the vertical scale (labeled 470 and 510, so that distance = 40 CSS points), I find that the marks are 91 pixels apart, so each vertical pixel is 40/91 of a CSS point (~= 0.44 CSS points/pixel). Using the same method, I find (412 horizontal pixels)/(300 months) ~= 1.37 pixels/month ~= 16.5 pixels/year.
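The calibration described here amounts to two scale factors. A small sketch using the pixel measurements quoted in the comment; the helper `pixel_to_css` and its reference-row argument are hypothetical additions for illustration, not something from the slideshow or the commenter's files.

```python
# Measurements quoted in the comment.
CSS_SPAN = 510 - 470     # CSS points between the two labelled tick marks
VERTICAL_PIXELS = 91     # pixel distance between those marks
MONTH_SPAN = 300         # months covered by the measured horizontal distance
HORIZONTAL_PIXELS = 412  # pixel length of that distance

css_per_pixel = CSS_SPAN / VERTICAL_PIXELS          # about 0.44 CSS points per pixel
pixels_per_month = HORIZONTAL_PIXELS / MONTH_SPAN   # about 1.37 pixels per month
pixels_per_year = pixels_per_month * 12             # about 16.5 pixels per year


def pixel_to_css(y_pixel, y_ref_pixel, css_ref=470.0):
    """Convert an image row to a CSS score.

    y_ref_pixel is the row of the tick labelled css_ref (hypothetical input,
    read off the image). Image rows grow downward, so rows above the
    reference tick map to higher scores.
    """
    return css_ref + (y_ref_pixel - y_pixel) * css_per_pixel


print(round(css_per_pixel, 2), round(pixels_per_month, 2), round(pixels_per_year, 1))
```

For example, a point 91 pixels above the 470 tick converts back to 510, which is a quick sanity check on the scale factor.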

The graph in slide 19 shows the Rasch scores on the block rotation subtest from age 2 to 25 for the average as well as for each standard deviation +/- 3 s.d., so allows comparing the absolute intelligence of people with different ages and z-scores.

For those who haven't looked at the graph, the average score appears to go up as the logarithm of age, rising quickly at first, then more slowly with greater age. The standard deviations are widely spaced at first, but become tightly spaced by age 9, after which they again diverge, with the upper standard deviations scores rising more rapidly while the lower ones are almost flat.

The SB5 service bulletin #3 has on page 12 of the PDF a reprint from the SB5 interpretive manual of the average full-scale CSS scores (table 4), which closely matches the average line in slide 19, so the block rotation subtest average scores vs. age should be a reasonable proxy for the full scale, (though as I said the standard deviations on BR are likely somewhat smaller than the FS).

Using a horizontal straightedge on the graph allows equating a given CSS score to z-scores at different ages. The Mk.I eyeball gives a pretty decent estimate of fractional z-scores falling between the s.d. lines, but one can use the line tool to get better measurements of the z-score that equates to a given CSS at a given age.

Here is that updated graph with what I hope is a more coherent explanation: Converting IQ at a Given Age to an Absoloute (Rasch) Measure of Intelligence

Thank you. Will read